A few weeks ago I attended an Open Source Jam where the topic was "building blocks." I gave a lightning talk about why the combination of Atom and Webhooks is changing the way web applications interoperate. In this set of blog posts I'd like to flesh out that five-minute presentation and explain how Atom is potentially a universal payload format for the web in the same way that byte streams are a universal payload format for Unix.

- Part 1: Communicating with atoms [1]

- Part 2: Webhooks

- Part 3: JSONistas and XMLheads

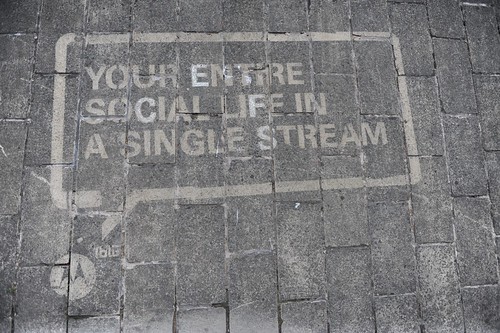

Here's the problem. I have N different web apps that I want to connect to each other in arbitrary ways. Some of these web apps don't exist yet and some of them will be written by people I don't know who won't ask permission before they connect their web apps to mine.

In an enterprise environment we'd solve this using some kind of common messaging infrastructure and/or a universal data format. We would then deal with the inevitable pain as the evolution of the different web applications broke compatibility or locked the entire system into moving at the pace of the slowest team.

On the web we don't have that luxury. But Atom can help.

The Atom specification includes a number of simple-looking features which solve complex problems and open up the possibility of using Atom entries as a universal payload format for interoperability across the web.

The basic structure of an Atom document requires either an atom:feed or atom:entry as the top level element. Apart from that you must have an atom:id, an atom:author, atom:link, atom:title and an atom:updated [2]. Everything else is optional.
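
As a rough illustration of how little that is, here is a minimal sketch that builds an atom:entry containing only the elements listed above, using Python's standard `xml.etree.ElementTree`. The `build_entry` helper, the id, the author name and the URL are all made up for the example.

```python
import xml.etree.ElementTree as ET
from datetime import datetime, timezone

ATOM_NS = "http://www.w3.org/2005/Atom"
ET.register_namespace("", ATOM_NS)  # serialise Atom as the default namespace


def build_entry(entry_id, title, author_name, link_href, updated):
    """Build an atom:entry holding only the elements listed above (hypothetical helper)."""
    entry = ET.Element(f"{{{ATOM_NS}}}entry")
    ET.SubElement(entry, f"{{{ATOM_NS}}}id").text = entry_id
    ET.SubElement(entry, f"{{{ATOM_NS}}}title").text = title
    # atom:updated is an RFC 3339 timestamp; isoformat() on an aware datetime fits
    ET.SubElement(entry, f"{{{ATOM_NS}}}updated").text = updated.isoformat()
    author = ET.SubElement(entry, f"{{{ATOM_NS}}}author")
    ET.SubElement(author, f"{{{ATOM_NS}}}name").text = author_name
    ET.SubElement(entry, f"{{{ATOM_NS}}}link", rel="alternate", href=link_href)
    return entry


entry = build_entry(
    "tag:example.org,2010:post-1",  # made-up id; it does not have to be a URL
    "Communicating with atoms",
    "Example Author",               # made-up author
    "http://example.org/posts/1",   # made-up link
    datetime(2010, 5, 3, 12, 0, tzinfo=timezone.utc),
)
entry_xml = ET.tostring(entry, encoding="unicode")
print(entry_xml)
```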

Since Atom is based on XML it has support for namespaces. This makes it possible for you to take an atom:entry and enrich it with new tags that only make sense in the context of your application. For instance, the Activity Streams specification adds lots of new tags. However, it also uses lots of tags from other specifications. It extends Atom and re-uses Atom Threading Extensions, Atom Media Extensions, xCal, PortableContacts and GeoRSS. The feed ends up looking like this:

But what if you just want to treat that ActivityStream or any other Atom extension as if it were a simple Atom feed?

Atom Processors that encounter foreign markup in a location that is legal according to this specification MUST NOT stop processing or signal an error. It might be the case that the Atom Processor is able to process the foreign markup correctly and does so. Otherwise, such markup is termed "unknown foreign markup".

When unknown foreign markup is encountered as a child of atom:entry, atom:feed, or a Person construct, Atom Processors MAY bypass the markup and any textual content and MUST NOT change their behavior as a result of the markup's presence.

When unknown foreign markup is encountered in a Text Construct or atom:content element, software SHOULD ignore the markup and process any text content of foreign elements as though the surrounding markup were not present.

Well, the Atom specification insists that an Atom processor, like your web app, 'must ignore' foreign markup in an Atom feed unless it is sure it knows what to do with it. This means that we can have multiple different but interoperable versions of Atom or any other XML language floating around at the same time. Tim Bray called this "an unstated axiom of the World Wide Web" and I agree with him that this simple rule allows "multidirectional growth" since anyone can extend Atom without asking permission from a central authority or worrying too much about versioning. I also agree with Sam Ruby when he says that "90% of all namespaces are crap" but since we can't tell which namespaces will become popular and which will be ignored we should let anybody and everybody have a go.

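The 'must ignore' rule is easy to honour in practice. The sketch below, again using Python's standard `xml.etree.ElementTree`, reads the children of an atom:entry that live in the Atom namespace and silently skips anything else. The `ext` namespace, its elements and the `read_known_fields` helper are invented for illustration, and the helper only handles simple text-valued children.

```python
import xml.etree.ElementTree as ET

ATOM = "{http://www.w3.org/2005/Atom}"

# Made-up entry: the "ext" namespace and its elements do not belong to any real spec.
ENTRY_XML = """\
<entry xmlns="http://www.w3.org/2005/Atom"
       xmlns:ext="http://example.org/ns/ext">
  <id>tag:example.org,2010:post-1</id>
  <title>Hello</title>
  <updated>2010-05-03T12:00:00Z</updated>
  <ext:mood>cheerful</ext:mood>
  <ext:location><ext:city>London</ext:city></ext:location>
</entry>
"""


def read_known_fields(entry):
    """Return the text of children in the Atom namespace, skipping foreign markup."""
    known = {}
    for child in entry:
        if child.tag.startswith(ATOM):
            known[child.tag[len(ATOM):]] = (child.text or "").strip()
        # Anything else is unknown foreign markup: no error, no change in behaviour.
    return known


fields = read_known_fields(ET.fromstring(ENTRY_XML))
print(fields)
```

A processor that does understand the `ext` elements can use them, while every other processor still sees a perfectly ordinary entry.
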
The above makes it sound like we're entering into a happy, fun world of atomic interoperability and distributed extensibility. However, you will eventually want to re-publish an atom:entry from another feed. Then you'll realise that the compulsory elements I listed above aren't enough. If you're building a tool like Planet Venus or Streamer you're going to need to generate an Atom feed containing entries from other websites. These sites may be using various different extensions and id generation schemes. The spec says that you should preserve the atom:feed's original atom:id inside the atom:source if the atom:feed was the top-level element.

This way the copied atom:entry keeps the "permanent, universally unique identifier" that its author(s) assigned as its atom:id, instead of you making your own, and the atom:source records the metadata of the feed the entry originally came from. If someone then re-publishes part of your feed, they can still find the point of origin of the atom:entry.
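
Here is a minimal sketch of that re-publishing step, assuming the source document has atom:feed as its top-level element. The `copy_with_source` helper and the sample feed are hypothetical. The entry's atom:id is left exactly as published, the feed's metadata (every child of atom:feed except the entries) is copied into atom:source, and an entry that already carries an atom:source keeps it.

```python
import copy
import xml.etree.ElementTree as ET

ATOM_NS = "http://www.w3.org/2005/Atom"
ATOM = f"{{{ATOM_NS}}}"
ET.register_namespace("", ATOM_NS)

# Made-up source feed containing a single entry.
SOURCE_FEED = """\
<feed xmlns="http://www.w3.org/2005/Atom">
  <id>tag:example.org,2010:feed</id>
  <title>Example Blog</title>
  <updated>2010-05-03T12:00:00Z</updated>
  <entry>
    <id>tag:example.org,2010:post-1</id>
    <title>Hello</title>
    <updated>2010-05-03T12:00:00Z</updated>
  </entry>
</feed>
"""


def copy_with_source(feed, entry):
    """Copy an entry for re-publishing, recording its feed's metadata in atom:source."""
    copied = copy.deepcopy(entry)  # atom:id is kept exactly as the author assigned it
    if copied.find(f"{ATOM}source") is None:  # never overwrite an existing origin
        source = ET.SubElement(copied, f"{ATOM}source")
        for child in feed:
            if child.tag != f"{ATOM}entry":  # feed metadata only, not the entries
                source.append(copy.deepcopy(child))
    return copied


feed = ET.fromstring(SOURCE_FEED)
republished = [copy_with_source(feed, e) for e in feed.findall(f"{ATOM}entry")]
print(ET.tostring(republished[0], encoding="unicode"))
```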

The above sounds complicated but it means we can treat Atom entries as a loosely joined chain of small pieces that can be piped, filtered and aggregated with web-based equivalents of the standard Unix tools. By simply publishing a feed containing these pieces I know that other people's tools can consume, re-mix, compose and syndicate my content in ways I can't even think of.
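
To make the Unix analogy concrete, here is a small sketch in which Python generators stand in for pipes. The function names (`entries`, `grep_title`, `newest_first`) and the two sample feeds are invented for the example, and sorting atom:updated as plain strings is only safe here because every timestamp uses the same `Z` suffix.

```python
import xml.etree.ElementTree as ET
from itertools import chain

ATOM = "{http://www.w3.org/2005/Atom}"

# Two made-up feeds standing in for documents fetched from different sites.
FEED_A = """<feed xmlns="http://www.w3.org/2005/Atom">
  <entry><title>Atom and webhooks</title><updated>2010-04-01T00:00:00Z</updated></entry>
  <entry><title>Cooking</title><updated>2010-04-02T00:00:00Z</updated></entry>
</feed>"""
FEED_B = """<feed xmlns="http://www.w3.org/2005/Atom">
  <entry><title>Why Atom matters</title><updated>2010-04-03T00:00:00Z</updated></entry>
</feed>"""


def entries(feed_xml):
    """Yield every atom:entry element in a feed document (roughly `cat`)."""
    yield from ET.fromstring(feed_xml).iter(f"{ATOM}entry")


def grep_title(stream, word):
    """Pass through entries whose title contains `word` (roughly `grep -i`)."""
    for entry in stream:
        if word.lower() in (entry.findtext(f"{ATOM}title") or "").lower():
            yield entry


def newest_first(stream):
    """Order entries by atom:updated, newest first (roughly `sort -r`)."""
    return sorted(stream, key=lambda e: e.findtext(f"{ATOM}updated") or "", reverse=True)


# Aggregate two feeds, filter them, then sort the result.
pipeline = newest_first(grep_title(chain(entries(FEED_A), entries(FEED_B)), "atom"))
titles = [e.findtext(f"{ATOM}title") for e in pipeline]
print(titles)
```

Each stage only needs to understand atom:entry, so stages written by different people can be chained without knowing about each other.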

The simple blog aggregators we've been building are just the beginning and in part 2 I'll talk about how Webhooks will let us go beyond feeds and start thinking in terms of low-latency streams.

Photo by http://www.flickr.com/photos/codepope/

Footnotes:

1- Thanks to Sheila Thomson for the title.

2- These tags may be optional or compulsory depending on which other tags you're using.

Updated 2010/05/03:

Bob Wyman has pointed out some mistakes in the original version of this article. Firstly, atom:source elements may contain elements from the atom:feed, not the atom:entry. Secondly, atom:id elements are permanent and universally unique identifiers which don't have to be URLs, so it's misleading to talk about them pointing anywhere. Finally, atom:source is not about the provenance of an atom:entry, since that would require tracking all the locations it went through before you saw it, but about the point of origin. See here for more details from Bob.
